With a pandemic sweeping the country, an economy on the brink of collapse, and the future of society hanging in the balance, 2020 is officially ruined. But does that mean there’s nothing salvageable?

Last month, Google announced the open-source release of kpt, tooling the search giant has used internally since 2014. In a nutshell, kpt facilitates the creation, cross-team and cross-org sharing, and management of the bundles of Kubernetes manifests that make up an application deployment. To date, this task has been handled by DSLs like Helm, which packages these manifests as so-called Charts. However, because kpt comes from the creators of Kubernetes, it’s hard to imagine it won’t capture serious attention as a first-class packaging solution.

In this article, we won’t focus so much on the nitty-gritty of how kpt works (the official Google blog post announcement and user guide go into more detail on this front), but rather on a practical example of its usage followed by an analysis of its strengths, weaknesses, and production-readiness.

A rundown of Kpt

What is kpt?

From the official announcement post, kpt is an “…OSS tool for Kubernetes packaging, which uses a standard format to bundle, publish, customize, update, and apply configuration manifests.” A bit of a mouthful. In a more condensed form, kpt introduces a structured way to create and share bundles of manifests that can be modified as appropriate for a given use case.

That’s still a bit too head-in-the-clouds to be useful. Let’s take the concrete example of my agency MeanPug Digital (shameless pug). We often build and deploy Django applications for clients and over time have learned what resources and configurations are optimal for dev, staging, and production environments. Copying and pasting our Kubernetes manifests between projects invites entropy, reduced specialization, errors, and barriers to rolling upgrades. Instead, we can use kpt to define a common set of manifests and then modify these as appropriate for each specific use case. This is more concrete, but still not concrete enough, so in the next section we’ll cover the deployment of a toy Node.js app via a kpt-configured bundle.

Getting started with Kpt

Before we move on to the application, you’ll need to make sure you have kpt installed. Personally, because I’m not a masochist, I used homebrew for installation. The official kpt installation guide has pretty straightforward instructions for all you cool cats and kittens though.

Kpt Example Application and Workflow

The source code for this application and all kpt configuration is available in our github repo

Today we’ll be deploying a toy (and by toy I mean “hello world”) express app to a Kubernetes cluster via configuration defined in a kpt bundle. One important point to note is that for the purpose of this demo, all kpt and application source are defined in the same repository. The kpt “published” templates and “consumed” templates have been separated into separate directories marked as – creatively – “published” and “consumed”, but a more realistic flow involves having a set of common kpt bundles (your “published” ones) in a separate repo and only including consumed bundles in the application repo.

The application

Our toy application is as follows:

    // src/index.js
    const express = require('express');

    const app = express();
    const port = 3000;

    app.get('/', (req, res) => res.send('Hello World!'));

    app.listen(port, () => console.log(`Example app listening at http://localhost:${port}`));

The Dockerfile

    # Dockerfile
    FROM node:12

    WORKDIR /code

    COPY package*.json ./
    COPY src ./src

    RUN npm install

Defining the published kpt package

Now the fun begins. To start, we use the kpt pkg init command to create an application bundle. Remember, this is the base bundle that we could pull in and apply to both the current app as well as any similar applications we might deploy. I’m intentionally glossing over what similar means, but for our purposes we take similar to mean any express web app. This interpretation is subject to opinion.

mkdir express-web

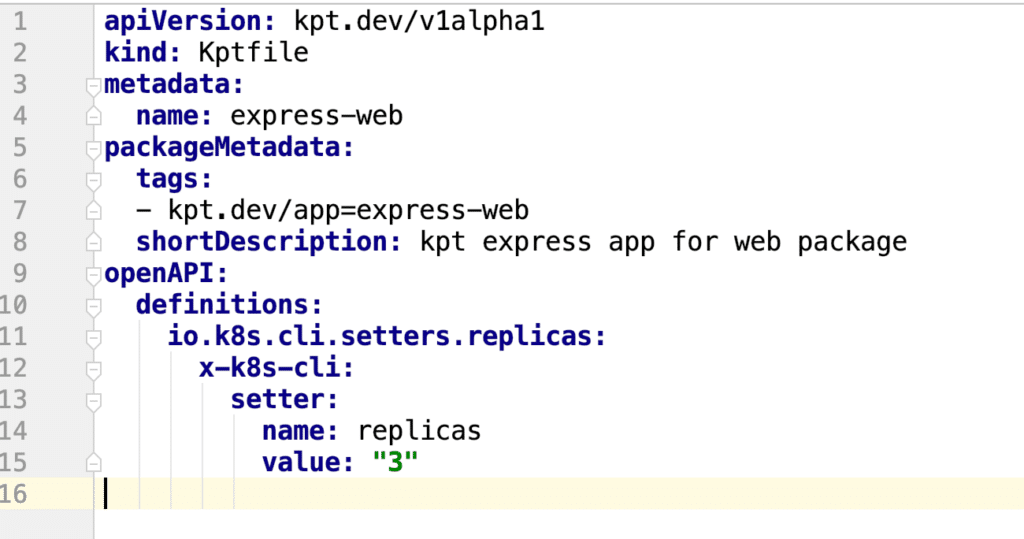

kpt pkg init express-web --tag kpt.dev/app=express-web --description "kpt express app for web package"and just like that a wild bundle appears in the express-web folder. You’ll notice we now have a Kptfile with some pretty bare metadata. As we parameterize our template with setters and substitutions this file will really start to fill out.

Inside our new express-web folder let’s add the basic manifests that make up our application deployment.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: express-web-nginx-sites-configmap
      labels:
        meanpug.com/app: express-web
    data:
      express-web.conf: |
        server {
          listen 80;
          server_name test-express.meanpugk8.com;

          location / {
            proxy_pass http://localhost:3000;
            proxy_set_header Host $host;
          }
        }

nginx configuration

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: express-web-deployment
      labels:
        app: express-web
        meanpug.com/app: express-web
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: express-web
      template:
        metadata:
          labels:
            app: express-web
        spec:
          volumes:
            - name: sites-enabled
              configMap:
                name: express-web-nginx-sites-configmap
          containers:
            - name: nginx
              image: nginx:1.14.2
              volumeMounts:
                - name: sites-enabled
                  mountPath: /etc/nginx/conf.d/
              ports:
                - containerPort: 80
              livenessProbe:
                httpGet:
                  path: /
                  port: 80
                initialDelaySeconds: 5
                periodSeconds: 5
            - name: express
              image: meanpugdigital/kpt-demo:latest
              command: ["node", "src/index.js"]
              ports:
                - containerPort: 3000
                  name: app

application deployment

    apiVersion: v1
    kind: Service
    metadata:
      name: express-web-service
      labels:
        meanpug.com/app: express-web
    spec:
      type: NodePort
      selector:
        app: express-web
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80

service

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: express-web-ingress
      labels:
        meanpug.com/app: express-web
      annotations:
        kubernetes.io/ingress.class: "nginx"
    spec:
      rules:
        - host: choo-choo.meanpug.com
          http:
            paths:
              - path: /
                backend:
                  serviceName: express-web-service
                  servicePort: 80

ingress

We’ve now got everything we need to deploy our app! But wait…there are some specs in our definitions that seem like they ought to be parameterized between applications. Off the bat, the following jump to mind:

- Each application should be able to scale up/down replicas as load demands.

- The application image and tag will definitely be different from app to app.

- Unless we want to serve every app from the same host, our nginx server names and ingress host value will need to be easily changeable.

Enter setters and substitutions.

Let’s start by making replica count a configurable option. We do this through the kpt cfg create-setter command. In our case, run kpt cfg create-setter express-web/ replicas 3. Now check out your Kptfile.

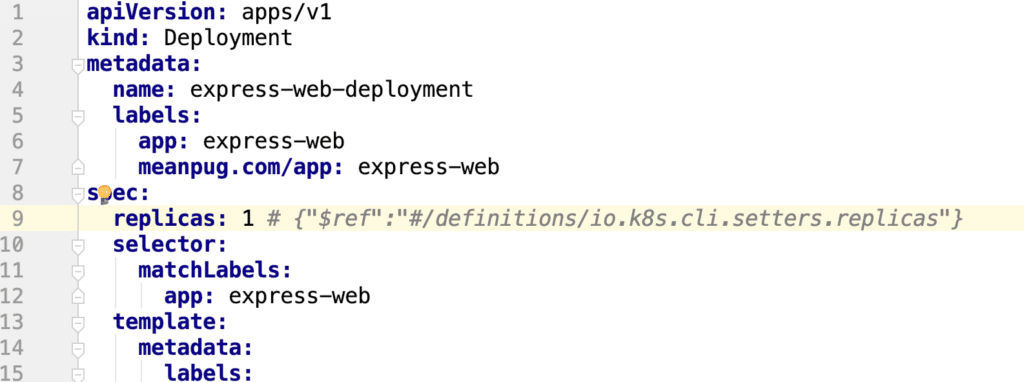

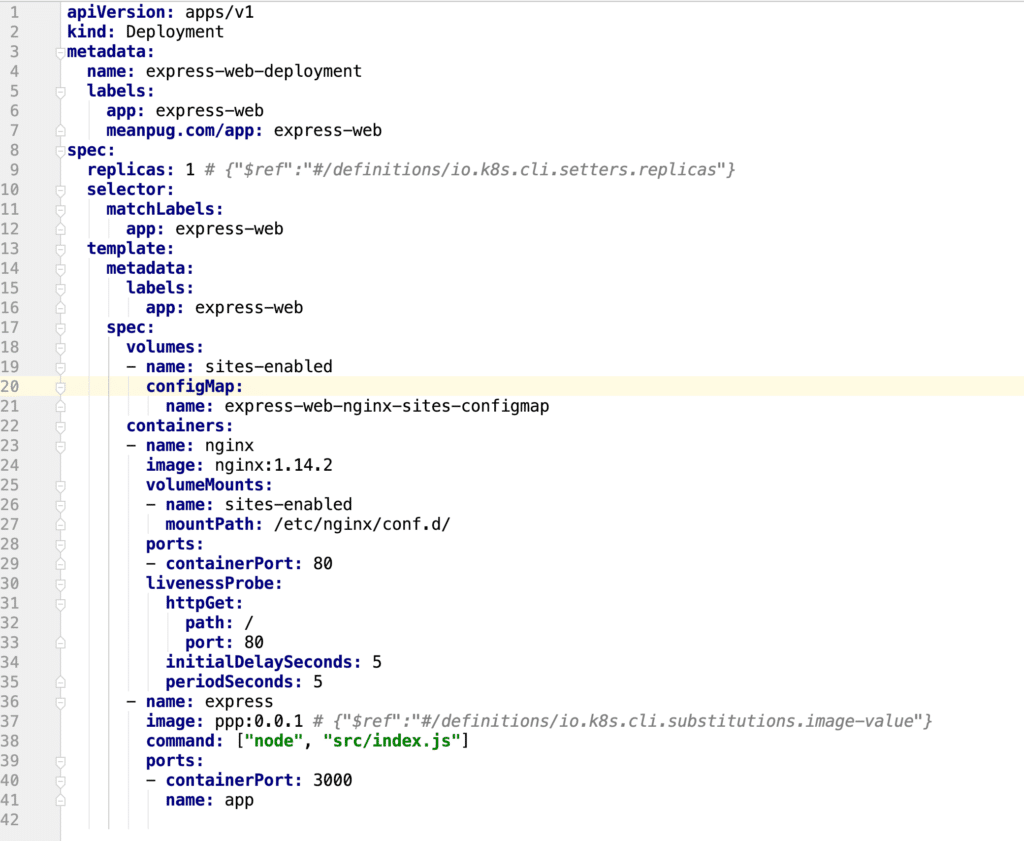

Pretty magical, huh? You should also see a line like replicas: 3 # {"$ref":"#/definitions/io.k8s.cli.setters.replicas"} appear in your deployment.yaml manifest. However, I’ve sometimes seen this necessary line fail to inject, in which case you’ll need to add the comment manually. One possible cause: create-setter seems to match fields by their current value, and the manifest above declares replicas: 1 rather than 3. Once the line is in place, the replicas field in your deployment carries that trailing comment.

We’ll dive into how these setters are used in the Consuming Kpt Packages section below, but as a sneak peek run kpt cfg set express-web replicas 2 and take a look at your Kptfile and deployment 🐶.
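To make the role of that trailing comment more tangible, here is a minimal sketch of the idea behind a setter: find the lines that reference the setter and rewrite only their value. This is an illustration under simplifying assumptions, not kpt’s actual implementation; applySetter is a hypothetical helper, and it works on raw text lines rather than parsed YAML.

```javascript
// Simplified illustration of a kpt setter; NOT kpt's real implementation.
// applySetter is a hypothetical helper: it rewrites the value on every line
// whose trailing comment references the named setter.
function applySetter(yamlText, setterName, newValue) {
  const ref = `# {"$ref":"#/definitions/io.k8s.cli.setters.${setterName}"}`;
  return yamlText
    .split('\n')
    .map((line) => {
      if (!line.trimEnd().endsWith(ref)) return line;
      // Keep the indentation and key, swap only the value before the comment.
      const match = line.match(/^(\s*(?:- )?[^:#]+:\s*)(.*?)(\s*#.*)$/);
      return match ? `${match[1]}${newValue}${match[3]}` : line;
    })
    .join('\n');
}

const deployment = [
  'spec:',
  '  replicas: 3 # {"$ref":"#/definitions/io.k8s.cli.setters.replicas"}',
].join('\n');

// Mirrors the effect of: kpt cfg set express-web replicas 2
console.log(applySetter(deployment, 'replicas', 2));
```

The point of the sketch is that the comment is what ties a field to a setter: lines without it are left untouched, which is why a missing comment means the set command has nothing to update.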

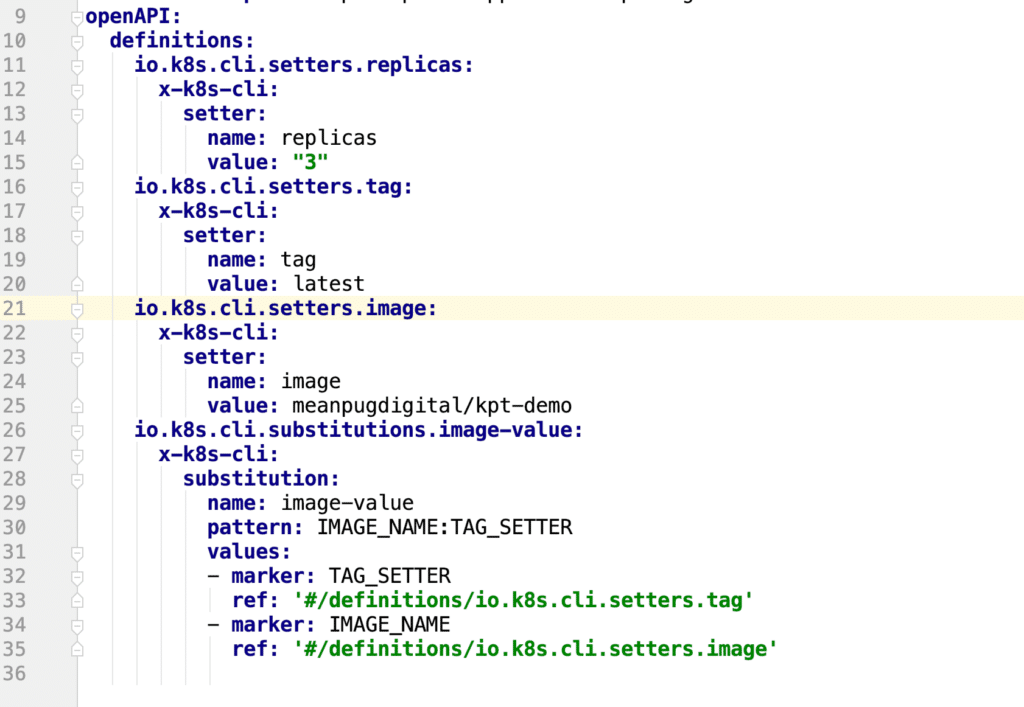

For our image name and tag, the process is a bit different, as we use kpt substitutions instead of direct setters. After running the command kpt cfg create-subst express-web/ image-value meanpugdigital/kpt-demo:latest --pattern IMAGE_NAME:TAG_SETTER --value TAG_SETTER=tag --value IMAGE_NAME=image, we’ll notice that our Kptfile has three new definitions:

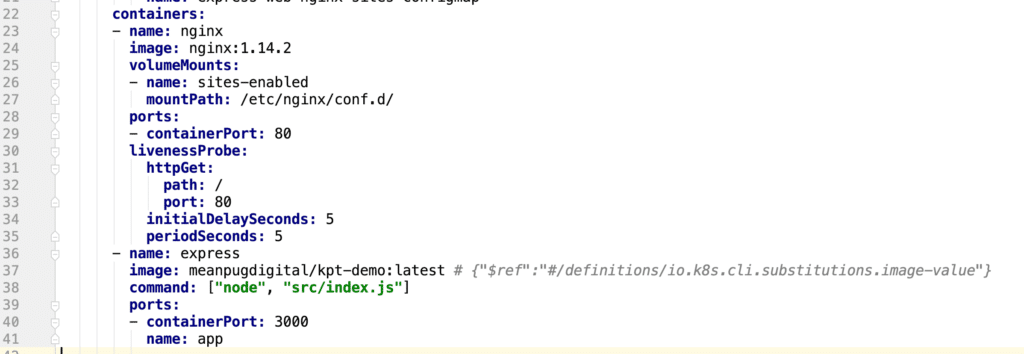

Notice the addition of the tag and image setters, as well as the new image-value substitution. Additionally, we should see in our deployment the presence of a # {"$ref":"#/definitions/io.k8s.cli.substitutions.image-value"} string.

Now, if we modify either the tag or image setter with our standard kpt cfg set, kpt will perform the appropriate substitutions in our deployment and Kptfile. We’ll dive into this in greater detail in a moment as we move into consuming our kpt definitions.
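Conceptually, a substitution is a pattern whose markers are filled in from setter values. The sketch below imitates that with the IMAGE_NAME:TAG_SETTER pattern from the command above. It is a simplified illustration, not how kpt is implemented, and renderSubstitution is a hypothetical helper.

```javascript
// Hypothetical helper, not kpt's implementation: expands a substitution pattern
// by replacing each marker with the current value of the setter it points to.
function renderSubstitution(pattern, markerToSetter, setterValues) {
  return Object.entries(markerToSetter).reduce(
    (result, [marker, setter]) => result.split(marker).join(String(setterValues[setter])),
    pattern
  );
}

const pattern = 'IMAGE_NAME:TAG_SETTER';
// Same marker-to-setter mapping as the --value flags passed to create-subst.
const markers = { IMAGE_NAME: 'image', TAG_SETTER: 'tag' };

console.log(renderSubstitution(pattern, markers, { image: 'meanpugdigital/kpt-demo', tag: 'latest' }));
// → meanpugdigital/kpt-demo:latest

console.log(renderSubstitution(pattern, markers, { image: 'ppp', tag: '0.0.1' }));
// → ppp:0.0.1
```

Changing a single setter re-renders the whole field, which is what lets one image line be driven by two independent values.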

Consuming and tweaking the published kpt package

Take a big breath as we switch context from publisher to consumer. Let’s put ourselves back in the concrete example of MeanPug Digital. We’ve now defined a common set of configuration for web apps built on the Express framework and we have a new client, let’s call them Puggle Professional Podiatrists, for which we’ve built a shiny new Express app. Time to deploy it with our curated kpt configuration. Important reminder: we’ve done all work in a single repo to this point. In reality, the published Kpt configuration from the last section would live in a separate repo which stores only our common Kpt packages.

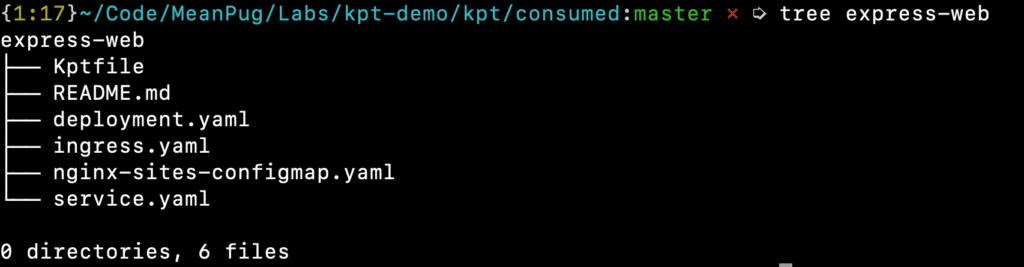

To start, we pull down the relevant app configuration with kpt pkg get. In our case this looks like kpt pkg get https://github.com/MeanPug/kpt-demo/kpt/published/express-web express-web. Hey, waddaya know, there’s that configuration we just had so much fun building:

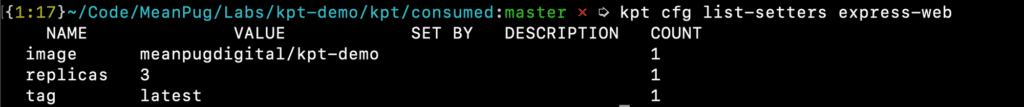

Let’s see what values are up for modification with kpt cfg list-setters express-web.

Hey now, that looks familiar! Let’s update the image and tag to be representative of our new Puggle clients (they’re very touchy about pedigree) with kpt cfg set express-web/ image ppp and kpt cfg set express-web/ tag 0.0.1.

our Puggle Professional Podiatrists Express Deployment

All that’s left now is to deploy our client application to the cluster.

Interlude: Pulling down package updates from upstream

Uh-oh, we totally forgot to add resource requests and limits to our pods. Big no-no. Too bad we already deployed hundreds of client apps with a faulty configuration. Looks like we’re going to have to go through each individually and re-deploy with the updated config. Sad face emoji <here>. Wait, what’s that? Is it kpt to the rescue?! It is indeed.

Kpt makes it easy to merge upstream changes into your kpt consumer packages. In fact, it makes it so easy that I’m going to take the lazy way out and leave it as an exercise for those dedicated souls who’ve reached here.
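For readers who want some intuition before attempting that exercise, merging an upstream change into a customized copy is essentially a three-way merge between the version originally fetched, the new upstream version, and the local copy. The sketch below shows the idea over flat key/value fields. It is purely conceptual: threeWayMerge and the cpuRequest and memoryLimit field names are hypothetical, real manifests are nested, and the kpt user guide is the place to check for the actual update command and its merge strategies.

```javascript
// Conceptual three-way merge over flat key/value fields; illustrative only.
// base:     the upstream version originally fetched
// upstream: the new upstream version
// local:    the consumer's customized copy
function threeWayMerge(base, upstream, local) {
  const merged = {};
  const keys = new Set([...Object.keys(base), ...Object.keys(upstream), ...Object.keys(local)]);
  for (const key of keys) {
    const localChanged = local[key] !== base[key];
    // Local customizations win; otherwise take whatever upstream now says.
    const value = localChanged ? local[key] : upstream[key];
    if (value !== undefined) merged[key] = value;
  }
  return merged;
}

const base = { replicas: 3, image: 'meanpugdigital/kpt-demo:latest' };
// Upstream adds the resource settings we forgot (hypothetical field names).
const upstream = { ...base, cpuRequest: '100m', memoryLimit: '256Mi' };
// The consumer only customized the image.
const local = { replicas: 3, image: 'ppp:0.0.1' };

console.log(threeWayMerge(base, upstream, local));
```

In this sketch the merged result keeps the consumer’s ppp:0.0.1 image while picking up the new resource settings from upstream, which is the behavior we want across all those client apps.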

Deploying the application

Well, you’ve made it. Give yourself a big pat on the back, treat yourself to a beer, take a victory lap, or just keep reading. You’re almost there.

Our clients are literally barking for their new application and we’ve got all the pieces lined up for a rollout. In order to actually take our Kpt bundle live, we need to do just two things:

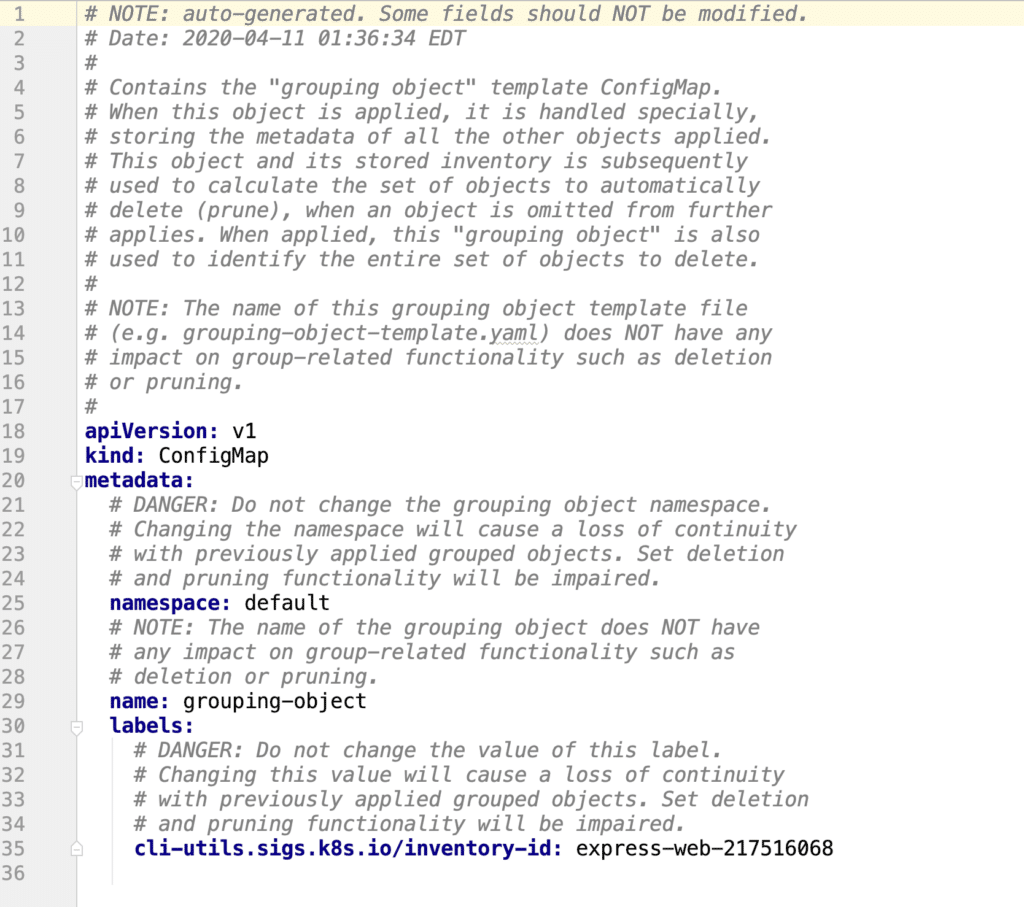

- Create what is called a grouping object (just a standard Kubernetes ConfigMap with some metadata tracking previously applied resources).

- Use the kpt live command to deploy our manifests.

To create the grouping object, run kpt live init express-web. This should place a grouping-object-template file in your kpt templates directory.

a generated grouping object
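Why track previously applied resources at all? One use is working out what to clean up: anything that was applied last time but no longer exists in the package can be pruned. The sketch below illustrates that set difference. It is an assumption-laden illustration rather than kpt’s implementation; planApply and the Kind/name ID format are hypothetical.

```javascript
// Illustrative sketch only; planApply is a hypothetical helper, not part of kpt.
// previouslyApplied: resource IDs recorded by the last apply (the grouping object's job)
// current:           resource IDs found in the package directory right now
function planApply(previouslyApplied, current) {
  const currentIds = new Set(current);
  return {
    apply: [...current],
    // Anything applied last time but missing now is a candidate for pruning.
    prune: previouslyApplied.filter((id) => !currentIds.has(id)),
  };
}

const lastApply = [
  'ConfigMap/express-web-nginx-sites-configmap',
  'Deployment/express-web-deployment',
  'Service/express-web-service',
  'Ingress/express-web-ingress',
];
// Suppose the Ingress manifest was deleted from the package before re-applying.
const now = lastApply.filter((id) => !id.startsWith('Ingress/'));

console.log(planApply(lastApply, now).prune);
// → [ 'Ingress/express-web-ingress' ]
```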

Finally, let’s get this sucker out to the world with kpt live apply express-web --wait-for-reconcile. 🐯

Our key points and takeaways

That was quite a journey, but this is a technology we’re really excited about at MeanPug. In a few sentences, here’s our rollup:

- Kpt is a young technology. Some functionality still feels clunky and buggy. Using kpt cfg create-setter doesn’t always set up the appropriate comments in relevant resource files, the documentation is rather sparse and hard to grok, and certain (under development) features like blueprints feel half-baked (understandably).

- Helm isn’t going anywhere anytime soon. I imagine I wasn’t the only one in the Kubernetes community who thought “well, there goes Helm” upon announcement of kpt, but that’s just not the case. Helm is a full-featured DSL; kpt is more like a natural extension of Kubernetes. In fact, the kpt documentation is explicit in calling out Helm as a complement, not competitor, to kpt.

- A promising future. I’m really excited to see where kpt goes in the next 2-3 years. As more teams adopt Kubernetes, the need for tooling will only grow and with Google backing it, kpt is poised to live as a core tool in the arsenal of devops teams.

We ❤️ Kubernetes at MeanPug, drop a line anytime